HEXperience of a lifetime – unveiling innovative student startups

10 Apr 2024

Earlier this year, a group of ANU students travelled to HEX International Singapore, a two-week immersive program for idea-stage founders to refine their start-up ideas and learn from leaders in business, social...

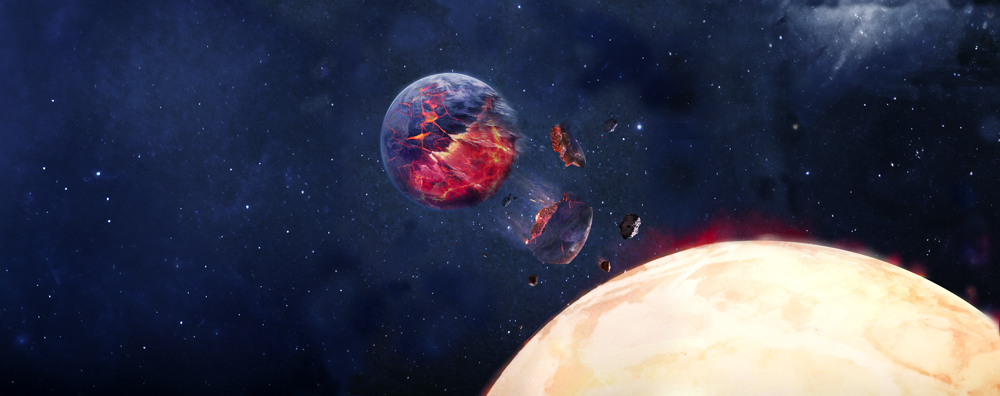

Astrocomputing detectives uncover planet-eating stars

21 Mar 2024

New research substantiates the mind-bending astrophysics behind the Netflix sci-fi saga Three-Body Problem and solves an interstellar murder mystery billions of years in the making.

Computing Internship Information Session Semester 2 2024

12 Mar 2024

Learn about taking an internship as part of your Computing program in Semester 2 2024.

School of Computing Induction

15 Feb 2024

Come along to the ANU CECC School of Computing induction event for Semester 1, 2024 during O-Week.

CECC Computing Tours O-Week S1 2024

12 Feb 2024

Join us for a CECC precinct tour during O-Week!

Computers to fight disease by predicting quantum chemistry

1 Feb 2024

A fortuitous encounter at the Australian National University (ANU) spawned QDX, a new start-up taking the biotech industry by storm.